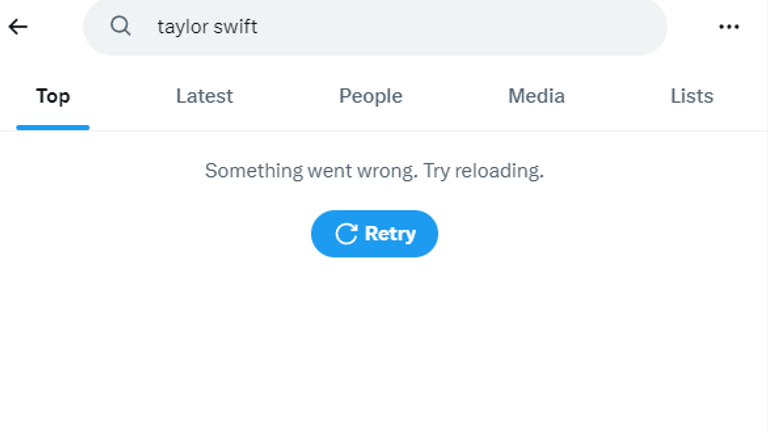

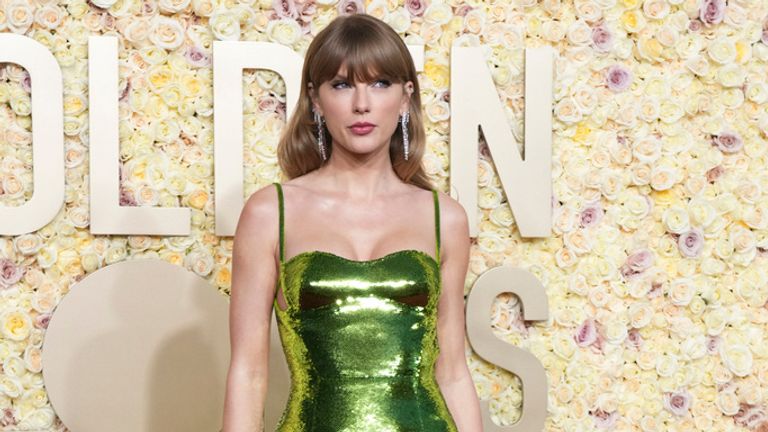

Searching for Taylor Swift’s name on X yields no results days after the pop star fell victim to deepfake sexual pictures circulating on the website.

“Something went wrong. Try reloading” appears when Swift’s name is typed into the search box.

Sky News approached X for comment.

On Friday President Joe Biden’s spokesperson said the fake, sexually explicit images of the star were “very alarming”.

White House Press Secretary Karine Jean-Pierre said social media companies have “an important role to play in enforcing their own rules”, as she urged Congress to legislate on the issue.

Microsoft CEO Satya Nadella admitted the company needs to “move fast” to combat the kind of images that circulated on social media, in comments to Sky News’s US partner network NBC.

The fake images of the pop star, believed to have been made using artificial intelligence (AI), were spread widely this week, with one picture on X viewed 47 million times before the account was suspended.

The group Reality Defender, which detects deepfakes, said it tracked a deluge of non-consensual pornographic material depicting Swift, particularly on X but also on Meta-owned Facebook and other social media platforms.

The researchers found several dozen different AI-generated images. The most widely shared were football-related, showing a painted or bloodied Swift that objectified her and in some cases inflicted violent harm on the deepfake version of her.

Ms Jean-Pierre said: “We’re going to do what we can to deal with this issue.

“So while social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation, and non-consensual, intimate imagery of real people.”

The spokesperson added that lax enforcement against false images too often disproportionately affects women.

‘We have to act’

Mr Nadella said the images “absolutely” set alarm bells ringing and said the fact this kind of content can be created and spread “behooves” platforms to “move fast”.

“I think we all benefit when the online world is a safe world, and so I don’t think anyone would want an online world that is completely not safe for both content creators and content consumers,” he added.

“We have to act.”

404 Media reported the deepfake images were traced back to a Telegram group chat, where members said they used Microsoft’s generative-AI tool, Designer.

Sky News has not independently verified that claim, but Microsoft said in a statement it was investigating the reports and would take appropriate action to address them.

Asked what can be done about the issue of fake images more generally, Mr Nadella said: “I go back to what I think’s our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced.

“And there’s a lot to be done and a lot being done there.”

He added that more can be done when law enforcement and tech platforms come together.

Social media sites condemn ‘violations’

Researchers have said the number of explicit deepfakes has increased in recent years, as the technology used to produce such images has become more accessible and easier to use.

In 2019, a report released by the AI firm DeepTrace Labs found that explicit images were overwhelmingly weaponised against women.

Most of the victims were Hollywood actors and South Korean K-pop singers, the report said.

X wrote in a post on the site on Friday that it is “actively removing all identified images and taking appropriate actions against the accounts responsible for posting them.

“We’re closely monitoring the situation to ensure that any further violations are immediately addressed, and the content is removed.”

Meanwhile, Meta said in a statement that it strongly condemns “the content that has appeared across different internet services” and has worked to remove it.

“We continue to monitor our platforms for this violating content and will take appropriate action as needed,” the company said.

Taylor Swift’s representatives did not respond to a Sky News request for comment.

Tops Top News Online Real News Portal
